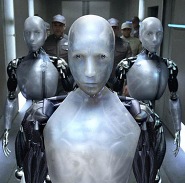

3 laws unsafe

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given to it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or the Second Law.

In fact, by the end of the 20th century, the Three Laws of Robotics had been extended to the following:

The Meta-Law: A robot may not act unless its actions are subject to the Laws of Robotics.

Law Zero: A robot may not injure humanity, or, through inaction, allow humanity to come to harm.

Law One: A robot may not injure a human being, or, through inaction, allow a human being to come to harm, unless this would violate a higher-order Law.

Law Two: A robot must obey orders given it by human beings, except where such orders would conflict with a higher-order Law. A robot must obey orders given it by superordinate robots, except where such orders would conflict with a higher-order Law.

Law Three: A robot must protect the existence of a superordinate robot as long as such protection does not conflict with a higher-order Law. A robot must protect its own existence as long as such protection does not conflict with a higher-order Law.

Law Four: A robot must perform the duties for which it has been programmed, except where that would conflict with a higher-order Law.

The Procreation Law: A robot may not take any part in the design or manufacture of a robot unless the new robot's actions are subject to the Laws of Robotics.

The laws are all well and good, but it does not seem to me that they are so well protected that a glitch in a machine or a hacking job could not remove or alter them. The more complicated a machine gets, the more likely it is to develop unexpected difficulties. Look at the number of difficulties inherent in Windows operating systems: the software will develop errors simply from the machine being turned on and off. What kind of errors may we expect in a machine that emulates, and maybe even surpasses, our human abilities? While the laws may work on 99% of the robots that are manufactured with them, what about the 1% or fewer that may have difficulties?

A few questions regarding the Laws. Consider a hypothetical case in which the US has lots of robots operating its weapons, while the Russians lack the technology but are well aware of the seven laws governing American robots. Should the Russians decide to launch, what would the robots' reaction be? Of course, they would be ordered to answer back; but launching a nuclear weapon, even in this situation, would still injure humanity (and would certainly not undo the harm already done by the first launch). Therefore, Law Zero (and Law One with it) would stop these robots from firing back. Even more, suppose the robots are not the ones responsible for firing: they would still be bound to stop anyone else from doing so, and would attack their very creators in the process. Who would want any of this, I wonder?
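To make the launch scenario concrete, here is a minimal sketch of how a strict priority ordering would resolve it. This is only an illustration under my own assumptions: the `Action` fields and the `decide` function are hypothetical names invented for this example, only Laws Zero, One and Two are modeled, and the sketch does not cover the "through inaction" clauses. Since harm to humanity falls under Law Zero, that is the law the sketch reports first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action, described only by the properties the laws test (hypothetical model)."""
    name: str
    harms_humanity: bool = False    # would violate Law Zero
    harms_human: bool = False       # would violate Law One
    ordered_by_human: bool = False  # Law Two asks the robot to carry it out


def decide(action: Action) -> str:
    """Check the laws from highest priority to lowest and stop at the first one that applies."""
    if action.harms_humanity:
        return "refuse (Law Zero)"
    if action.harms_human:
        return "refuse (Law One)"
    if action.ordered_by_human:
        return "obey (Law Two)"
    return "no obligation"


# The retaliation order from the scenario: a human order that would harm humanity.
retaliate = Action(
    "retaliatory launch",
    harms_humanity=True,
    harms_human=True,
    ordered_by_human=True,
)
print(decide(retaliate))

# A harmless order is simply obeyed.
print(decide(Action("open the door", ordered_by_human=True)))
```

In this toy model the order to fire back never gets a hearing, because the higher-order laws are checked first. That is exactly the outcome the scenario complains about.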

1 Comment:

The loopholes & conundrums inherent in the system are what make Asimov's robot stories so interesting.

As to the "system errors" problem, though, Asimov firmly stipulated that, by design, any flaws that were sufficiently serious to allow a laws-violation would also be serious enough to render the robot non-functional.

Perhaps you can think of it as analogous to the part of your brain that controls your heartbeat.
